Subsurface Scattering for Gaussian Splatting

SSS GS — Real-time rendering, relighting and material editing of translucent objects.

Overview

We propose photorealistic real-time relighting and novel view synthesis of subsurface scattering objects. We learn to reconstruct the shape and translucent appearance of an object within the 3D Gaussian Splatting framework. Our method decomposes the object into its material properties in a PBR-like fashion, with an additional neural subsurface residual component. To this end, we leverage our newly created multi-view, multi-light dataset of synthetic and real-world objects acquired in a light-stage setup. Our deferred shading approach achieves high-quality rendering results and enables detailed material editing. We surpass previous NeRF-based methods in training speed, rendering speed, and flexibility, while maintaining high visual quality.

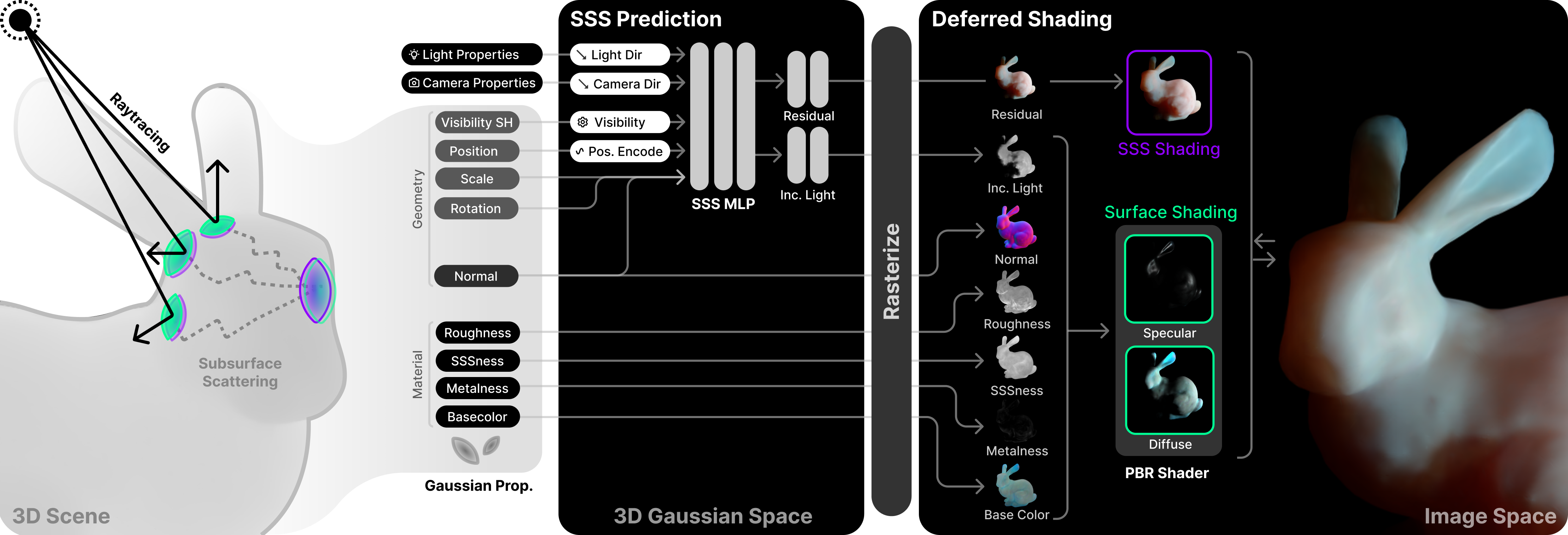

Pipeline

Our method implicitly models the subsurface scattering appearance of an object and combines it with an explicit surface appearance model. The object is represented as a set of 3D Gaussians, each carrying geometry and appearance properties. We use a small MLP to evaluate the subsurface scattering residual given the view direction, the light direction, and a subset of each Gaussian's properties. The same MLP also estimates the incident light at each Gaussian as a joint task, using visibility supervised by ray tracing. We then rasterize and accumulate each of these properties on the image plane in a deferred shading pipeline. For every pixel, we evaluate the diffuse and specular color with a BRDF model in image space and combine them with the SSS residual to obtain the final color of the object.
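The deferred shading step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. Per-Gaussian properties are alpha-blended front to back into a per-pixel G-buffer, using the standard 3D Gaussian Splatting compositing rule, and shading then runs once per pixel on the blended values. Several details are assumptions:

- the 14-channel layout;
- the helper names `composite`, `unpack`, `ggx_specular`, and `shade_pixel`;
- the choice of a Cook-Torrance/GGX BRDF with Schlick Fresnel;
- the additive combination with the SSS residual.

The `incident_light` channel stands in for the MLP's visibility-aware incident light estimate.

```python
import numpy as np

def composite(features, alphas):
    """Front-to-back alpha blending of per-Gaussian features at one pixel.

    features: (N, C) per-Gaussian attributes, sorted near-to-far.
    alphas:   (N,) opacity of each projected Gaussian at this pixel.
    """
    # T_i = prod_{j<i} (1 - alpha_j): transmittance left by the Gaussians in front.
    transmittance = np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))
    return (alphas * transmittance) @ features

def unpack(px):
    # Hypothetical 14-channel G-buffer layout, for illustration only.
    return {"base_color": px[0:3], "metallic": float(px[3]), "roughness": float(px[4]),
            "normal": px[5:8], "incident_light": px[8:11], "sss_residual": px[11:14]}

def ggx_specular(n, v, l, roughness, f0):
    """Cook-Torrance specular BRDF: GGX distribution, Schlick-GGX geometry, Schlick Fresnel."""
    roughness = max(roughness, 0.04)  # avoid a degenerate delta lobe
    h = (v + l) / (np.linalg.norm(v + l) + 1e-8)  # half vector
    n_l, n_v = max(n @ l, 0.0), max(n @ v, 1e-4)
    n_h, v_h = max(n @ h, 0.0), max(v @ h, 0.0)
    a2 = roughness ** 4  # GGX alpha = roughness^2, squared again here
    d = a2 / (np.pi * (n_h ** 2 * (a2 - 1.0) + 1.0) ** 2)
    k = (roughness + 1.0) ** 2 / 8.0
    g = (n_l / (n_l * (1 - k) + k)) * (n_v / (n_v * (1 - k) + k))
    f = f0 + (1.0 - f0) * (1.0 - v_h) ** 5
    return d * g * f / (4.0 * n_v * max(n_l, 1e-4))

def shade_pixel(px, light_dir, view_dir):
    """Deferred shading: surface BRDF term plus the (assumed additive) SSS residual."""
    gb = unpack(px)
    n = gb["normal"] / np.linalg.norm(gb["normal"])  # blended normals need renormalizing
    base, metallic = gb["base_color"], gb["metallic"]
    f0 = 0.04 * (1.0 - metallic) + base * metallic  # metallic-roughness workflow
    diffuse = (1.0 - metallic) * base / np.pi        # Lambertian term
    specular = ggx_specular(n, view_dir, light_dir, gb["roughness"], f0)
    n_l = max(n @ light_dir, 0.0)
    surface = (diffuse + specular) * gb["incident_light"] * n_l
    return surface + gb["sss_residual"]

# Two made-up Gaussians covering one pixel, sorted near-to-far.
g = np.array([
    [0.8, 0.6, 0.4, 0.0, 0.5, 0, 0, 1, 1, 1, 1, 0.1, 0.05, 0.02],
    [0.2, 0.2, 0.2, 0.0, 0.9, 0, 0, 1, 1, 1, 1, 0.0, 0.00, 0.00],
])
px = composite(g, np.array([0.7, 0.9]))
rgb = shade_pixel(px, light_dir=np.array([0.0, 0.0, 1.0]), view_dir=np.array([0.0, 0.0, 1.0]))
```

Blending attributes first and shading once per pixel keeps the BRDF cost proportional to the number of pixels rather than the number of Gaussians. This is the usual motivation for deferred shading.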

Results

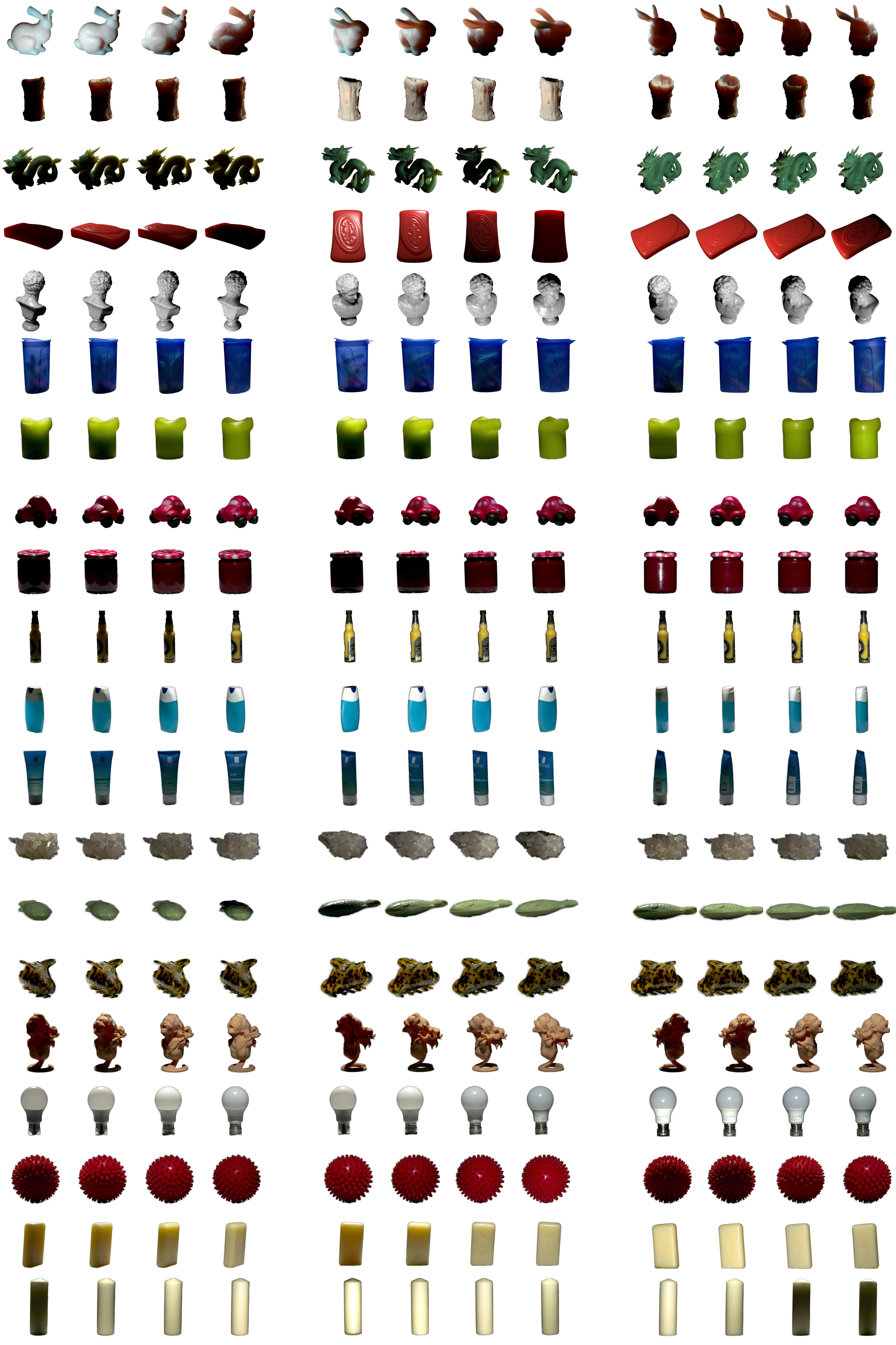

SSS GS achieves high visual quality, with an average PSNR of 37 dB, at real-time rendering speeds of around 150 FPS. We show results of our method on synthetic (top) and real-world (bottom) objects. We further demonstrate the decomposition, image-based lighting, and editing capabilities of our method. Detailed quantitative results and comparisons with NeRF-based methods can be found in the paper.
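For reference, PSNR is defined as 10 · log10(MAX² / MSE), measured in decibels. The snippet below is a generic implementation, not the paper's evaluation code, and it assumes images scaled to [0, 1]. Under that assumption, an average of 37 dB corresponds to a root-mean-square error of 10^(-37/20) ≈ 0.014, or about 1.4% of the intensity range. A rate of 150 FPS corresponds to roughly 6.7 ms per frame.

```python
import numpy as np

def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_val**2 / MSE)."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    mse = np.mean((pred - target) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))

# Worked numbers for the figures quoted above (images assumed in [0, 1]).
rmse_at_37db = 10 ** (-37 / 20)  # about 0.0141
ms_per_frame = 1000 / 150        # about 6.7 ms at 150 FPS
```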

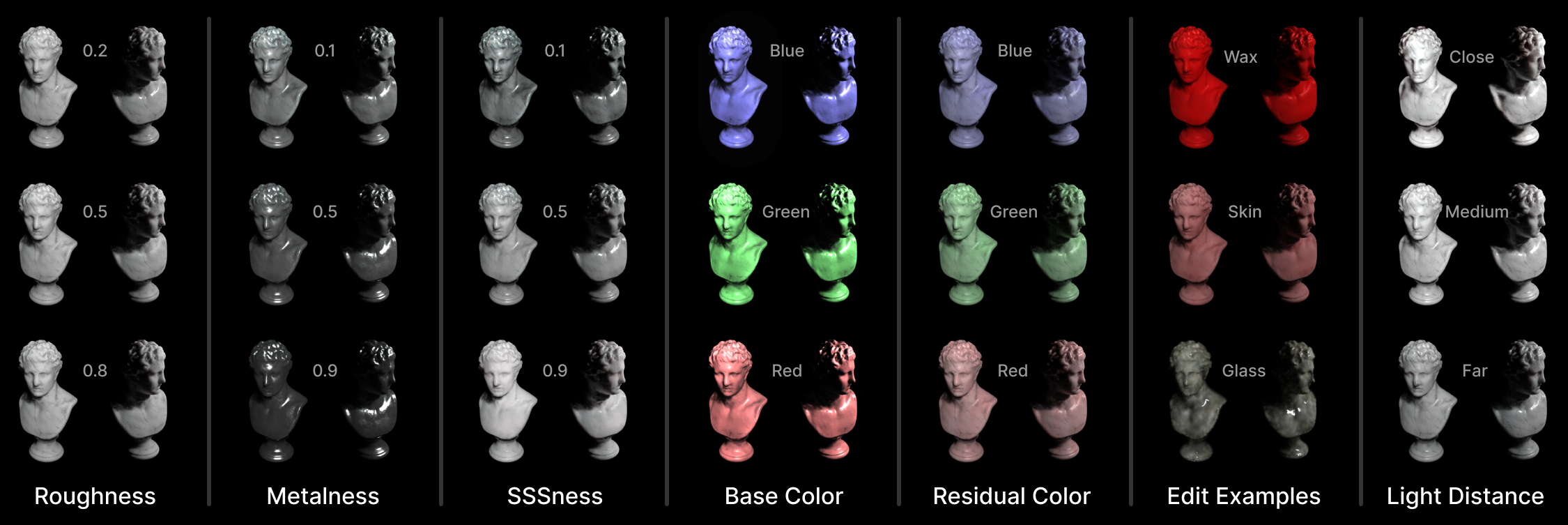

Decomposition: Because the object is modeled in a PBR fashion, we can decompose it into its material properties. We show the decomposition into base color, metalness, roughness, and subsurfaceness. We also present views of the SSS residual under novel lighting. We showcase two synthetic (candle / statue) and two real-world (soap / tupperware) objects.

Image-Based Relighting: Our method supports image-based lighting (IBL) with high visual quality. We showcase IBL with three different environment maps on two synthetic (bunny / soap) and two real-world (candle / car) objects.
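The page does not detail how SSS GS evaluates environment lighting. As a generic illustration of image-based lighting, the sketch below computes only the diffuse (Lambertian) term. It does so by brute-force integration over an equirectangular environment map, E(n) = ∫ L(ω) max(0, n·ω) dω. The map orientation (y-up, with rows spanning the polar angle) and the function names are assumptions. The specular and SSS contributions are omitted.

```python
import numpy as np

def irradiance_from_envmap(env, normal):
    """Diffuse irradiance E(n) = sum over texels of L(w) * max(0, n.w) * d_omega.

    env:    (H, W, 3) radiance; rows span polar angle theta in [0, pi] from +y,
            columns span azimuth phi in [0, 2*pi). (Assumed convention.)
    normal: unit surface normal, shape (3,).
    """
    h, w, _ = env.shape
    theta = (np.arange(h) + 0.5) * np.pi / h      # texel-center polar angles
    phi = (np.arange(w) + 0.5) * 2.0 * np.pi / w  # texel-center azimuths
    t, p = np.meshgrid(theta, phi, indexing="ij")  # both (H, W)
    dirs = np.stack([np.sin(t) * np.cos(p), np.cos(t), np.sin(t) * np.sin(p)], axis=-1)
    d_omega = np.sin(t) * (np.pi / h) * (2.0 * np.pi / w)  # solid angle per texel
    weights = np.clip(dirs @ normal, 0.0, None) * d_omega  # cosine-weighted, (H, W)
    return np.einsum("hw,hwc->c", weights, env)

def diffuse_ibl(albedo, env, normal):
    """Lambertian outgoing radiance: albedo / pi * E(n)."""
    n = normal / np.linalg.norm(normal)
    return albedo / np.pi * irradiance_from_envmap(env, n)

env = np.ones((64, 128, 3))  # constant white environment
rgb = diffuse_ibl(np.array([0.8, 0.5, 0.3]), env, np.array([0.0, 1.0, 0.0]))
# Approximately the albedo, because E(n) = pi for a constant unit-radiance map.
```

A brute-force sum over every texel is easy to verify but slow. Practical renderers usually prefilter the environment map or project it onto spherical harmonics instead.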

Editing: The decomposition enables detailed editing of the object. Our method supports adjusting the standard PBR properties as well as the SSS residual, offering editing capabilities beyond those available in previous methods.
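Material editing can be illustrated as modifying the decomposed properties before shading. The sketch below is hypothetical, and the page does not specify how the authors parameterize SSS edits:

- `MaterialEdit` and `apply_edit` are illustrative names.
- The property arrays are assumed to be per-Gaussian.
- The SSS residual is treated as the MLP output already evaluated for the current view and light, so scaling it strengthens or weakens the translucent contribution.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

import numpy as np

@dataclass(frozen=True)
class MaterialEdit:
    """Hypothetical edit parameters; the defaults leave the material unchanged."""
    base_color_tint: Tuple[float, float, float] = (1.0, 1.0, 1.0)  # RGB multiplier
    roughness_offset: float = 0.0              # added to roughness, then clamped to [0, 1]
    metallic_override: Optional[float] = None  # replaces metalness when set
    sss_scale: float = 1.0                     # multiplies the evaluated SSS residual

def apply_edit(props, edit):
    """Return an edited copy of per-Gaussian property arrays.

    props: dict with "base_color" (N, 3), "roughness" (N,), "metallic" (N,)
           and "sss_residual" (N, 3). The input dict is not modified.
    """
    out = {key: value.copy() for key, value in props.items()}
    tint = np.asarray(edit.base_color_tint, dtype=float)
    out["base_color"] = np.clip(out["base_color"] * tint, 0.0, 1.0)
    out["roughness"] = np.clip(out["roughness"] + edit.roughness_offset, 0.0, 1.0)
    if edit.metallic_override is not None:
        out["metallic"] = np.full_like(out["metallic"], edit.metallic_override, dtype=float)
    out["sss_residual"] = out["sss_residual"] * edit.sss_scale
    return out

# Example: tint towards red, make the surface rougher, and double the translucency.
props = {
    "base_color": np.full((2, 3), 0.5),
    "roughness": np.array([0.2, 0.9]),
    "metallic": np.zeros(2),
    "sss_residual": np.full((2, 3), 0.1),
}
edited = apply_edit(props, MaterialEdit(base_color_tint=(1.0, 0.5, 0.5),
                                        roughness_offset=0.2, sss_scale=2.0))
```

Returning a copy keeps the reconstructed material intact, so several edits can be previewed side by side from the same decomposition.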

Dataset

We are proud to announce and release the first translucent object dataset, comprising 20 distinct objects from various translucent material categories such as plastic, wax, marble, jade, and liquids. The objects were captured in a light stage setup: 15 are real-world scans, and an additional 5 are synthetic scenes acquired using Blender. Below is a subset of the more than 10k images captured per object, with more than 100 light positions and more than 100 camera positions. The full dataset is coming soon.

Open Positions

Interested in pursuing a PhD in computer graphics?

Never miss an update

Join us on Twitter / X for the latest updates from our research group and more.

Acknowledgements

Funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany's Excellence Strategy – EXC number 2064/1 – Project number 390727645. This work was supported by the German Research Foundation (DFG): SFB 1233, Robust Vision: Inference Principles and Neural Mechanisms, TP 02, project number: 276693517. This work was supported by the Tübingen AI Center. The authors thank the International Max Planck Research School for Intelligent Systems (IMPRS-IS) for supporting Jan-Niklas Dihlmann and Arjun Majumdar. In cooperation with Sony Semiconductor Solutions Europe, the authors would like to thank Mr. Zoltan Facius and Mr. Prasan Ashok Shedligeri.